I’ve noticed a new trend among the chattering classes of online AI-guy hypebeasts. Their current stage of grief over AGI not arriving, or over their LLMs having very little to show in the way of ROI, has shifted to blaming the users. To them, it’s not that AI is failing to work as they intended; it’s that people are too stupid to understand how to use it.

I can no longer find the tweet, but one OpenAI researcher basically said “the only reason it seems like AI is hitting a wall now” (a notable admission, even if rhetorical) “is because the frontier models are simply too sophisticated for the layperson to understand how to operate.” The hardcore partisans are apparently giddy over what has been achieved internally in exceedingly narrow use cases, but the unwashed masses are still too dim to be able to prompt. Oh brother.

Then there’s this tweet from Nikunj Kothari, who essentially shifts the blame for slow AI adoption in business onto the humans who are resistant.

I am old enough to remember the old Silicon Valley dictum that if you’re not talking to users and you’re not solving an unmet need, then your startup is dead on arrival. Funny thing is, I actually believe this techno-gospel. I’ve lived this out and found it to be true. Good user research is gold. You must be led by the user’s needs, no matter how willing or unwilling they are to change their behavior. Their problem should take precedence, not your purely technical innovation.

GenAI is acutely impressive for its autocomplete-style prediction of the next most likely word in response to a prompt, but it’s perhaps alone in being a technology so thoroughly celebrated without any meaningful or disciplined user research to justify its existence.

I tried my best to expound on the 7 Types of AI user, some of which have been borne out in the time that has passed. And while it’s a cliche that Silicon Valley tech is a hammer looking for a nail, in this case the massive expenditures of time and resources, not to mention the AI hype that portends an upheaval of human nature and our most basic institutions, seem wholly unsubstantiated given the lack of viable, continuous use cases.

After the initial technical shock of seeing how loosely “smart” some of the responses have been, LLMs still haven’t gotten over hallucinations. What’s worse, the open-ended nature of the prompt is a massive hurdle. Just unleashing Copilot on a massive organization without specific, pre-defined use cases is resulting in chaos. Raising $200M for a vibe coding app that demos beautifully but fails to retain users after a few months is a recipe for disaster. Right now AI is a fun parlor trick; it’s closer to a personal tool than to anything that will be deployed reliably enough to drive the kind of economic shift its investors are betting on. There are just far too many startups rushing into an unproven customer pain point. At this point, the funding of these startups is less a sound calculation that user growth will be sustained and more speculative wishful thinking that *waves hands* “AI is the future.” GenAI is a `universal` tool desperately seeking a `particular` use case. Particular use cases are much more powerful.

I was chatty on Notes this week. Here are some of the longer ones in case you missed them.

On Slop

Slop is a lack of:

intent

precision

decisions

care

conviction

transparency in production

There is a common misconception about Slop. Slop may have “existed” prior to AI, but I argue that AI meaningfully redefined the pre-slop cultural tendencies (kitsch?) through the way AI labs engineered a use case for creation that lacks precisely these qualities.

Secondary definition, which I think is integral to the contemporary idea of Slop: it must have loosely aggregated training data on which it “optimizes.” The [training data] + [model] + [prompt] equation is net new to us and thus constitutes a marker for AI slop.

There are only a few technical media precedents for this, and they are flimsy, which is why the argument that “we have always been slopping” fails, to me, to account for the urgency of the term.

On AI in the Workplace

About 90% of the “AI in the workplace” hype comes from three groups:

Bag holders: those with direct financial stakes in AI companies.

Heads-down ML researchers: brilliant but often too deep in the weeds to see the broader picture.

Semi-professional hypebeasts who ride the grift orthogonally for unexplored psychological coping reasons.

What most people in these groups fail to grasp is how AI is perceived by those with normal, real jobs (both middle managers and individual contributors). These professionals see a clear tradeoff between quality and efficiency. The models just aren’t there yet. See: “WorkSlop”

I constantly see pitches like: “An exec spent 4 months and $500K on user research that AI did in a week.” or “Consulting is cooked: I made a BCG-style deck in 3 minutes with a prompt.”

These takes miss the point, and it’s getting harder to ignore given the market caps. At the biggest and most important corporate level, there’s always time and budget for these efforts because getting it right matters. Real insights come from real people. Cutting corners with AI leads to statistically plausible but ultimately shallow results based on messy inputs.

This is my number one reason for calling the top. The bubble won’t burst because AI fails to improve; it’ll burst because the market for it won’t arrive fast enough. Spend a week inside a large organization and you’ll see just how badly the AI boosters have misread how work actually gets done.

Reminder: the rough transcript of my talk at MIT is on Substack

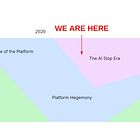

This talk weaves together a number of topics for the incredible audience that was there in person. Main takeaways:

We got here because of a monstrous ideological capture of “technology,” not because of “technology” in itself

The coming AI Slop era will give rise to a new kind of post-computational institution

Building these new institutions requires defining the virtues of the institution and adapting towards “intra-institutional” technology